LARGE SCALE GAN TRAINING FOR HIGH FIDELITY NATURAL IMAGE SYNTHESIS

Scene 1 (0s)

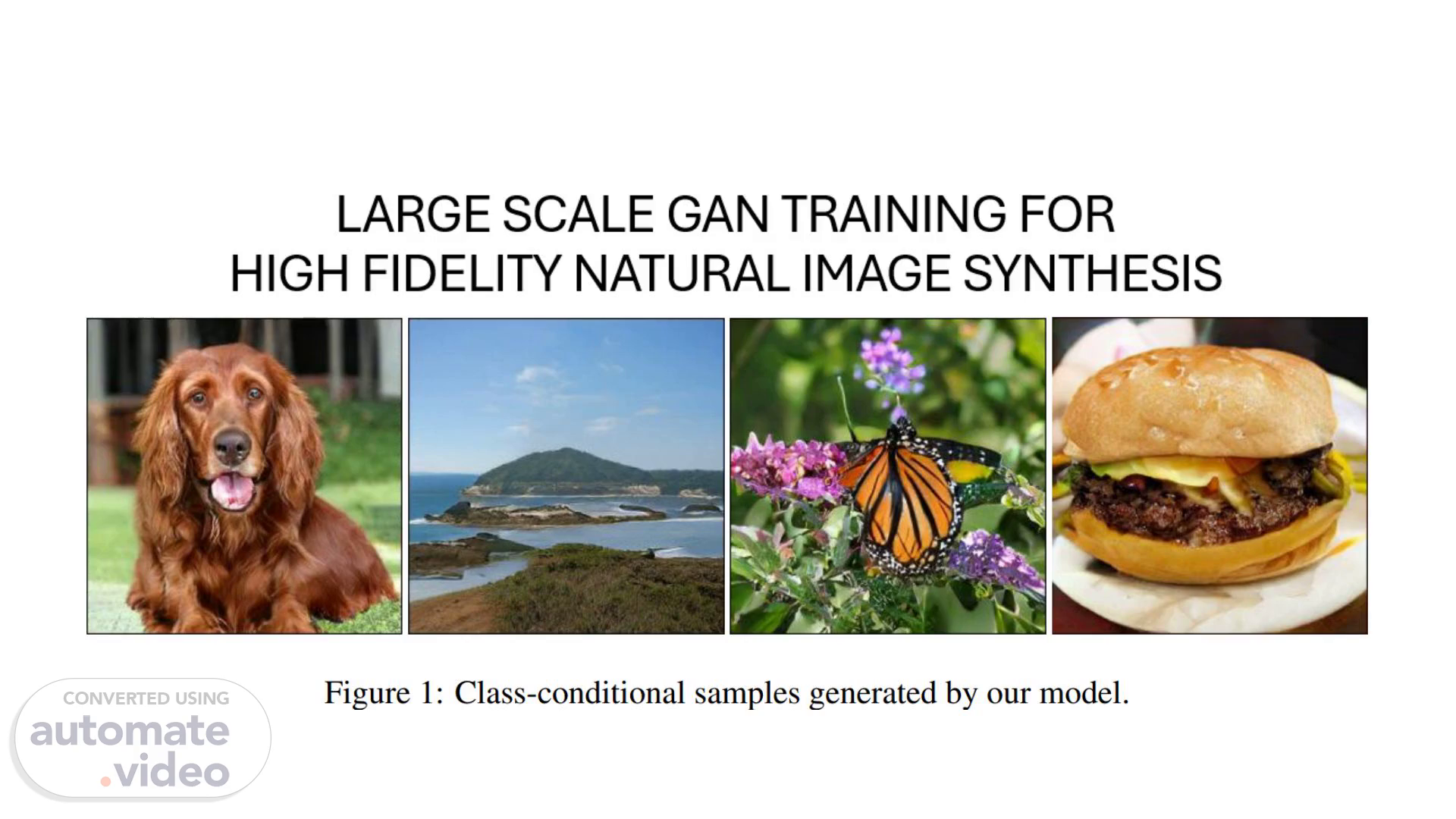

[Audio] Hi, I am presenting the paper "Large Scale GAN Training for High Fidelity Natural Image Synthesis", which was an oral presentation at ICLR 2019. It has three authors: Brock from Heriot-Watt University, and Donahue and Simonyan from Google DeepMind.

Scene 2 (22s)

[Audio] Despite recent progress in image generation, producing high-resolution, diverse samples from complex datasets such as ImageNet remains difficult. Generative Adversarial Networks (GANs) are promising but suffer from unstable training and limited performance. The authors train GANs at a much larger scale than before and apply orthogonal regularization to the generator, which makes it amenable to a simple truncation of the latent space; this truncation gives control over the trade-off between sample fidelity and diversity. These changes achieve state-of-the-art results in class-conditional image synthesis on ImageNet, and the paper also analyzes the instabilities that are specific to large-scale GAN training.

Scene 3 (1m 9s)

[Audio] The study finds that stability does not come from the generator or the discriminator alone but emerges from their interaction. The symptoms that precede training collapse can be tracked during training, which helps characterize these instabilities. Tight constraints on the discriminator can guarantee stability, but they harm sample quality. Relaxing those constraints and letting collapse happen only late in training, once the model has already reached good results, gives better final performance.

Scene 4 (2m 48s)

[Audio] The study aims to improve GAN training by scaling up both model size and batch size. The baseline is the SA-GAN architecture, trained with the hinge-loss GAN objective and using class-conditional batch normalization to feed class information to the generator. The optimization settings follow SA-GAN, notably applying Spectral Normalization in the generator, except that the authors halve the learning rates and take two discriminator steps for every generator step. For evaluation, they use a moving average of the generator's weights with a decay of 0.9999. Models use orthogonal initialization and are trained on Google TPUv3 Pods, with the generator's BatchNorm statistics computed across all devices rather than per device, which improves performance and scalability.
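The hinge objective and the generator weight averaging mentioned above fit in a few lines of NumPy. This is a minimal sketch, assuming the discriminator outputs raw, unbounded scores; the function names and the dictionary-of-arrays parameter format are hypothetical and not taken from the authors' code.

```python
import numpy as np


def d_hinge_loss(d_real, d_fake):
    """Discriminator hinge loss: push real scores above +1 and fake scores below -1."""
    d_real = np.asarray(d_real, dtype=float)
    d_fake = np.asarray(d_fake, dtype=float)
    return np.mean(np.maximum(0.0, 1.0 - d_real)) + np.mean(np.maximum(0.0, 1.0 + d_fake))


def g_hinge_loss(d_fake):
    """Generator loss under the hinge objective: raise the discriminator's score on fakes."""
    return -np.mean(np.asarray(d_fake, dtype=float))


def ema_update(ema_params, params, decay=0.9999):
    """One step of an exponential moving average of generator weights (used for evaluation)."""
    return {name: decay * ema_params[name] + (1.0 - decay) * params[name] for name in params}
```

For example, `d_hinge_loss([2.0], [-2.0])` is 0 because both scores are already beyond the margin, while `d_hinge_loss([0.0], [0.0])` is 2. In a training loop following the settings above, the discriminator loss would be minimized twice per generator update, with `ema_update` applied to the generator weights after each generator step.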

Scene 5 (3m 33s)

[Audio] Performance is measured with the Fréchet Inception Distance (FID) and the Inception Score (IS): lower FID and higher IS are better. The first four rows of the table show that increasing the batch size improves performance substantially. The authors conjecture that larger batches cover more modes of the data, which gives better gradients to both the generator and the discriminator.
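Both metrics can be computed from statistics of a pretrained Inception network. The sketch below assumes those outputs are already available (class probabilities for IS, pooled feature vectors for FID) and omits the network itself; the function names are hypothetical. It uses the standard definitions IS = exp(E_x[KL(p(y|x) ‖ p(y))]) and FID = ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}).

```python
import numpy as np


def inception_score(probs, eps=1e-12):
    """IS from an (N, C) array of class probabilities p(y|x)."""
    probs = np.asarray(probs, dtype=float)
    p_y = probs.mean(axis=0, keepdims=True)  # marginal class distribution p(y)
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))


def _sqrt_psd(mat):
    """Symmetric square root of a positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def fid_from_stats(mu1, cov1, mu2, cov2):
    """Frechet distance between Gaussians N(mu1, cov1) and N(mu2, cov2)."""
    mu1, mu2 = np.atleast_1d(np.asarray(mu1, float)), np.atleast_1d(np.asarray(mu2, float))
    cov1, cov2 = np.atleast_2d(cov1).astype(float), np.atleast_2d(cov2).astype(float)
    root1 = _sqrt_psd(cov1)
    # Tr((cov1 cov2)^{1/2}) equals the sum of square roots of the eigenvalues of the
    # symmetric PSD matrix root1 @ cov2 @ root1, which avoids a general matrix sqrt.
    cross = np.linalg.eigvalsh(root1 @ cov2 @ root1)
    tr_sqrt = np.sum(np.sqrt(np.clip(cross, 0.0, None)))
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(cov1) + np.trace(cov2) - 2.0 * tr_sqrt)


def fid_from_features(feats1, feats2):
    """Fit a Gaussian to each (N, D) feature array and compare the two fits."""
    return fid_from_stats(feats1.mean(axis=0), np.cov(feats1, rowvar=False),
                          feats2.mean(axis=0), np.cov(feats2, rowvar=False))
```

The two metrics reward different things. IS reaches its maximum (the number of classes) when every sample is confidently classified and the classes are used evenly. FID compares the whole feature distribution with that of real data, so it also penalizes missing variety.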

Scene 6 (4m 33s)

[Audio] Another advantage of larger batches is that the model reaches a given level of performance in fewer iterations. For example, in the table, with a batch size of 256 the model reaches an FID of 18.65 after 1,000 × 10^3 iterations, whereas with a batch size of 2048 it reaches an FID of 12.39 after only 732 × 10^3 iterations.
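The table counts iterations, not images. A quick calculation with the two rows quoted above, assuming one batch of the stated size per iteration (which ignores details such as the two discriminator steps per generator step), shows where the saving lies. `images_seen` is a hypothetical helper.

```python
def images_seen(batch_size, iterations_k):
    """Training images processed, given iterations in units of 10^3 as in the table."""
    return batch_size * iterations_k * 1_000


small = images_seen(256, 1000)   # row reported at FID 18.65
large = images_seen(2048, 732)   # row reported at FID 12.39
print(f"batch  256: {small:,} images")
print(f"batch 2048: {large:,} images ({large / small:.1f}x as many)")
```

Under this assumption, the larger batch needs about 27% fewer sequential steps but processes roughly 5.9 times as many images. The gain is in steps, which parallel hardware such as TPU Pods can exploit, rather than in total computation.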

Scene 7 (5m 11s)

[Audio] However, is a larger batch always better? Not necessarily. The paper notes that with larger batch sizes, training becomes more unstable and prone to mode collapse, where the generator produces the same or very similar outputs for different latent vectors.

Scene 8 (5m 28s)

[Audio] The authors then increased the width (number of channels) of every layer in both the generator and the discriminator by 50%, which improved IS by a further 21%, as rows 4 and 5 of the table show. They conjecture that the extra capacity helps because it better matches the complexity of the dataset. Increasing depth by adding more layers, on the other hand, did not improve performance.

Scene 9 (6m 9s)

[Audio] Previous GAN models typically drew the latent vector z from a standard normal or a uniform distribution, but this choice may not be ideal. With the Truncation Trick, the authors train the model with z drawn from a standard normal but, at sampling time, draw z from a truncated normal: any value whose magnitude exceeds a chosen threshold is resampled. This improves the quality of individual samples at the cost of overall diversity. As the threshold decreases, the sampled z values concentrate near the center of the prior and the outputs approach the mode of the generator's output distribution, so diversity shrinks.
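A minimal NumPy sketch of the sampling side of the Truncation Trick, assuming the generator was trained with z drawn from N(0, I). `sample_truncated_z` is a hypothetical helper, and the generator itself is omitted.

```python
import numpy as np


def sample_truncated_z(batch, dim, threshold, rng=None):
    """Draw z ~ N(0, I), resampling every entry with |z| > threshold until all fall inside."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    rng = np.random.default_rng() if rng is None else rng
    z = rng.standard_normal((batch, dim))
    outside = np.abs(z) > threshold
    while outside.any():
        z[outside] = rng.standard_normal(outside.sum())  # redraw only the offending entries
        outside = np.abs(z) > threshold
    return z


rng = np.random.default_rng(0)
for t in (2.0, 1.0, 0.5):
    z = sample_truncated_z(64, 128, t, rng)
    print(t, round(float(np.abs(z).max()), 3), round(float(z.std()), 3))
```

The printed standard deviation shrinks as the threshold drops, which is the mechanism behind the fidelity-for-diversity trade. Only sampling changes, so one trained model can be evaluated at many thresholds.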

Scene 10 (6m 43s)

[Audio] The authors introduce orthogonal regularization to make models amenable to the Truncation Trick, so that the trade-off between sample quality and diversity can be tuned after training. By computing FID and IS across a range of truncation thresholds, they obtain a variety-fidelity curve, analogous to a precision-recall curve. IS keeps rising as the threshold is lowered, much like precision, because in class-conditional models it does not penalize a lack of variety. FID penalizes a lack of variety, like recall, but also rewards quality, so it first improves moderately and then worsens sharply as the threshold approaches zero. However, some larger models produce saturation artifacts when fed truncated noise. To address this, the authors enforce amenability to truncation by conditioning the generator to be smooth through Orthogonal Regularization, which pushes each weight matrix toward orthogonality with a strength set by a hyperparameter β. They explore several relaxed variants; the best-performing one removes the diagonal terms, minimizing the pairwise cosine similarity between filters without constraining their norms. With a small β of 10^-4, 60% of models trained with Orthogonal Regularization are amenable to truncation, compared with 16% without it.
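The penalties can be written down directly. The basic form is R_β(W) = β‖WᵀW − I‖²_F, and the relaxed variant replaces WᵀW − I with WᵀW ⊙ (1 − I), where 1 denotes the all-ones matrix, so only the off-diagonal (cross-filter) terms are penalized. The sketch below assumes filters are stored as rows of the flattened weight (the usual output-channel-first layout), so it forms W Wᵀ instead. The function name is hypothetical; in training, the penalty would be added to the generator loss for each regularized weight.

```python
import numpy as np


def ortho_penalty(weight, beta=1e-4, relaxed=True):
    """Orthogonal regularization penalty for a single weight tensor.

    The tensor is flattened to a matrix with one output filter per row, so
    gram[i, j] is the dot product between filters i and j.
    """
    w = np.asarray(weight, dtype=float)
    w = w.reshape(w.shape[0], -1)
    gram = w @ w.T
    eye = np.eye(gram.shape[0])
    if relaxed:
        # Relaxed variant: ignore the diagonal (filter norms), penalize only overlap between filters.
        residual = gram * (1.0 - eye)
    else:
        # Basic variant: push the Gram matrix toward the identity.
        residual = gram - eye
    return beta * float(np.sum(residual ** 2))


conv_w = np.random.default_rng(0).standard_normal((64, 3, 3, 3))  # hypothetical conv kernel
print(ortho_penalty(conv_w), ortho_penalty(conv_w, relaxed=False))
```

A scaled identity such as 2·I costs nothing under the relaxed penalty but is penalized by the basic one. This is the practical difference: the relaxed form leaves filter norms free and only discourages filters from overlapping.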

Scene 11 (8m 2s)

[Audio] Previous research has mostly studied GAN stability on small-scale problems, but the instabilities observed here arise specifically at large scale. To understand them, the authors track various statistics during training. They find that the top three singular values of each weight matrix are the most informative, and these can be computed efficiently with the Arnoldi iteration method. Most generator layers have well-behaved spectral norms, but some, typically the first layer, become ill-behaved: their spectra grow throughout training and explode at collapse.
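The tracked statistic is cheap to compute. The paper uses Arnoldi iteration; the sketch below swaps in plain subspace (orthogonal) iteration, a simpler method that also converges to the leading singular values, and flattens convolution kernels to one row per output filter. The function name and the default iteration count are assumptions for illustration.

```python
import numpy as np


def top_singular_values(weight, k=3, iters=100, seed=0):
    """Estimate the k largest singular values of a weight tensor by subspace iteration."""
    w = np.asarray(weight, dtype=float)
    w = w.reshape(w.shape[0], -1)
    rng = np.random.default_rng(seed)
    # Random orthonormal starting block of k vectors in the input space.
    q, _ = np.linalg.qr(rng.standard_normal((w.shape[1], k)))
    for _ in range(iters):
        # Apply W^T W, then re-orthonormalize so the block tracks the top-k right singular subspace.
        q, _ = np.linalg.qr(w.T @ (w @ q))
    # Singular values of W restricted to that subspace approximate sigma_0 >= sigma_1 >= sigma_2.
    return np.linalg.svd(w @ q, compute_uv=False)
```

Logging these three numbers per layer at regular intervals would reproduce the kind of spectral curves described above. Convergence speed depends on the gap between the third and fourth singular values, so layers with nearly equal values may need more iterations.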

Scene 12 (8m 35s)

[Audio] To determine whether this instability causes the collapse or is merely a symptom of it, the authors add extra constraints to the generator to counteract the spectral growth. They try regularizing the top singular value of each weight and clamping it to a target value. These techniques improve stability only partially and do not prevent training collapse, so the authors turn their attention to the discriminator.
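The clamping idea can be sketched with a singular value decomposition: only the component of W along its top singular direction is shrunk, so the other singular values are untouched. This illustration uses NumPy's full SVD for simplicity, whereas a large-scale version would compute only the top singular pair. `clamp_top_singular_value` and `sigma_clamp` are hypothetical names, and how the target value is chosen is left as a free parameter.

```python
import numpy as np


def clamp_top_singular_value(weight, sigma_clamp):
    """Shrink the largest singular value of a 2-D weight to sigma_clamp if it exceeds it."""
    w = np.asarray(weight, dtype=float)
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    excess = max(0.0, s[0] - sigma_clamp)
    # W = sum_i s_i u_i v_i^T, so subtracting excess * u_0 v_0^T lowers only s_0.
    return w - excess * np.outer(u[:, 0], vt[0])


w = np.diag([5.0, 3.0, 1.0])
print(np.linalg.svd(clamp_top_singular_value(w, 4.0), compute_uv=False))
```

Because the correction is rank one, it caps the spectral explosion seen in the first generator layer without rescaling the whole matrix, unlike dividing by the spectral norm.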

Scene 13 (9m 1s)

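[Audio] The discriminator's weight spectra are noisier than the generator's but otherwise well-behaved: the singular values grow steadily during training and only jump at collapse instead of exploding. The spikes suggest that the discriminator periodically receives very large gradients, which the authors attribute to the generator producing batches that strongly perturb it.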

Scene 14 (10m 13s)

[Audio] The authors add the R1 gradient penalty to the discriminator for stability. With a strong enough penalty, training becomes stable but performance degrades, and reducing the penalty still harms performance. During training, the discriminator's loss approaches zero and then jumps sharply at collapse, a sign of overfitting. An experiment on ImageNet confirms this: the discriminator is highly accurate on the training set but performs no better than chance on the validation set. This suggests that the discriminator memorizes the training data rather than generalizing, and that its role is to provide a useful learning signal to the generator rather than to generalize.
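For reference, the R1 penalty is R1 = (γ/2) · E_x[‖∇_x D(x)‖²] over real samples x, added to the discriminator's loss. The NumPy sketch below approximates the input gradient with central finite differences so that it stays dependency-free; a real implementation would use automatic differentiation. `r1_penalty` and the callable `d_fn` (mapping an (N, dim) batch to N scores) are hypothetical, and the default γ = 10 is only an example value.

```python
import numpy as np


def r1_penalty(d_fn, x_real, gamma=10.0, h=1e-5):
    """R1 = gamma / 2 * mean over real samples of ||grad_x D(x)||^2.

    Gradients are approximated with central finite differences, one input
    coordinate at a time.
    """
    x_real = np.asarray(x_real, dtype=float)
    grads = np.zeros_like(x_real)
    for j in range(x_real.shape[1]):
        step = np.zeros(x_real.shape[1])
        step[j] = h
        grads[:, j] = (d_fn(x_real + step) - d_fn(x_real - step)) / (2.0 * h)
    return 0.5 * gamma * float(np.mean(np.sum(grads ** 2, axis=1)))


w = np.array([3.0, 4.0])                      # toy linear discriminator D(x) = x @ w
x = np.random.default_rng(0).standard_normal((5, 2))
print(r1_penalty(lambda batch: batch @ w, x))  # gradient is w everywhere, so (10/2) * 25
```

The penalty grows with how sharply D changes around real data, which is consistent with a strong penalty stabilizing the discriminator while also limiting it.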

Scene 15 (10m 43s)

[Audio] In short, the discriminator tends to memorize rather than generalize, and constraining it enough to stabilize training costs performance. Training stability depends on the interaction between the generator and the discriminator, not on either network alone. In practice, the best strategy is to relax the constraints and let training run into its later stages, when collapse may occur, because by then the model has already achieved good results.

Scene 16 (11m 11s)

[Audio] This paper demonstrates the benefits of scaling up GANs for modeling natural images. The resulting models improve both the fidelity and the diversity of generated samples and outperform previous GAN models on ImageNet. The work also analyzes large-scale GAN training, characterizing its stability through the singular values of the weight matrices and shedding light on the trade-off between stability and performance. Note, however, that the 512×512 models were trained on 512 TPU cores for up to 48 hours, which makes the results hard to replicate without comparable hardware.